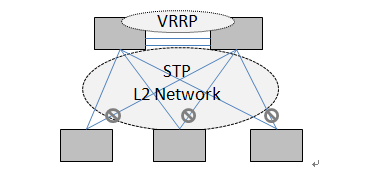

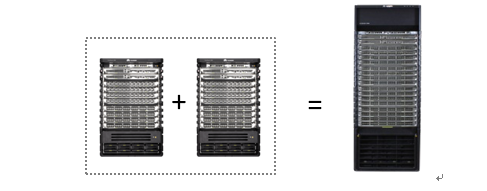

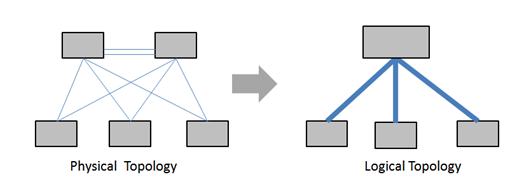

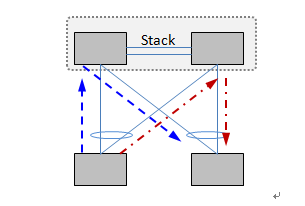

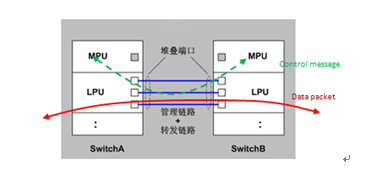

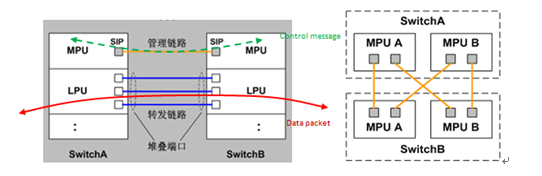

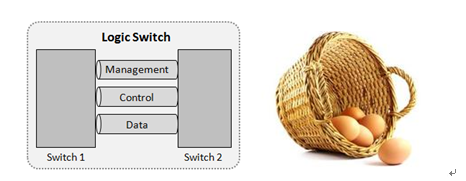

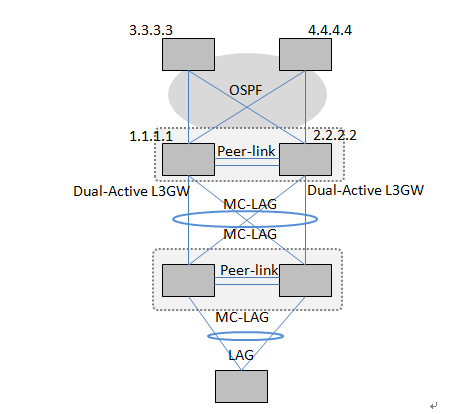

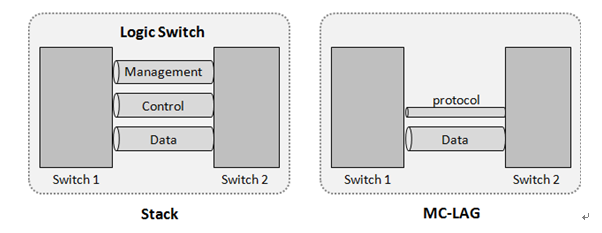

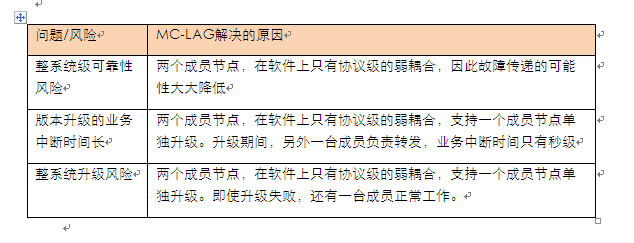

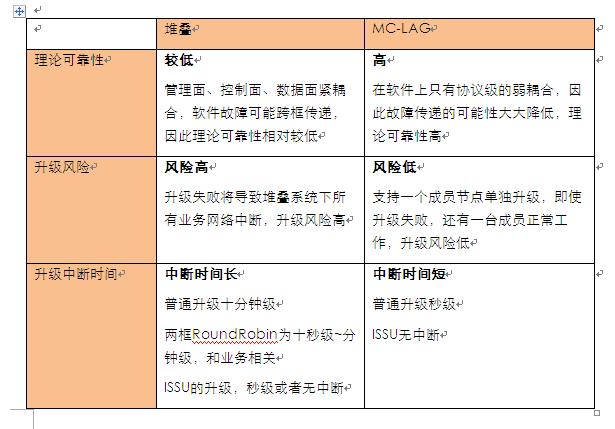

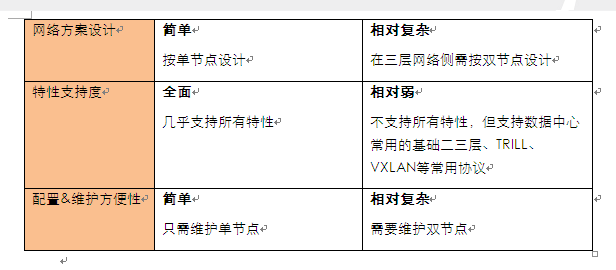

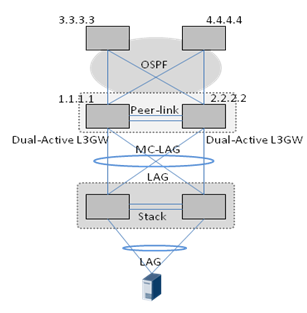

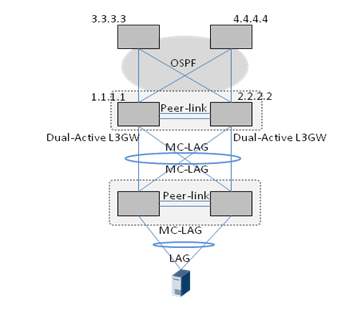

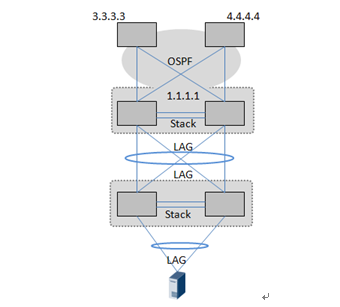

Virtualization is a buzzword in the data center: horizontal virtualization, vertical virtualization, one-to-many virtualization, NVO3 virtualization, and more. Today I would like to talk about horizontal virtualization. Using the Huawei CloudEngine 12800 series as an example, this article traces the origin and development of the technology, introduces the characteristics of the various horizontal virtualization technologies in plain terms, and suggests a selection strategy for different scenarios.

The origin of horizontal virtualization clusters

In the early days of data center networking there were no dedicated data center switches. What to do? Take campus switches, run the most traditional VRRP + STP, and make do. The result is the classic campus network shown in the figure. This network model has a classic, reliable campus flavor. Over time, however, problems appear:

- Traffic keeps growing, and STP blocking leads to low link utilization.
- Forwarding does not follow the shortest path, the root of the tree becomes a bandwidth bottleneck, and forwarding delay is high.
- VRRP provides a single active gateway, so the standby device sits idle.
- STP networks are limited in size and converge slowly.
- There are many nodes to manage, the logical topology is complex, and maintenance is troublesome.

These problems created the demand for horizontal virtualization, and clustering of chassis switches took the lead.

Stacking

Typical chassis switch stacking technologies include Cisco's VSS (Virtual Switching System), Huawei's CSS (Cluster Switch System), and H3C's IRF (Intelligent Resilient Framework). VSS, CSS, and IRF are all stacking at heart, just under different names. Vendors have of course developed some differences, but that is a topic for another time. Stacking technology is essentially the merging of the management plane, control plane, and forwarding plane.
The main control board of the stack system manages all line cards and fabric boards of the two physical devices, which become one large logical switch. Note, however, that the purpose of stacking is not only to make the switch bigger. Seen from the network's point of view, the logical topology also becomes "tall, rich, and handsome" (gao fu shuai). The main signs of this:

- A super node with almost twice the switching capacity.
- Layer 2 and Layer 3 traffic is fully load-shared, making full use of all links.
- A single logical node with comprehensive service support and a simple network design.
- Protection against physical node failure by deploying cross-chassis link aggregation.
- Two network elements become one, which simplifies network management and maintenance.

There are further benefits:

- Shortest-path forwarding and low latency.
- A larger Layer 2 network than traditional STP allows.
- Link-aggregation convergence performance, so the network converges quickly after a failure.

In a stack system, the bandwidth of the stack links is always scarce compared with that of the service ports. Forwarded traffic should therefore avoid the stack links as much as possible. This is called local-priority forwarding. As shown in the figure, the Huawei data center switch stacking system supports local-priority forwarding for Layer 3 ECMP and for link aggregation. Local-priority forwarding saves stack-link bandwidth and also reduces forwarding delay.

In addition to the general stacking technology described above, the Huawei CloudEngine 12800 series high-end data center switches have also been significantly optimized for stack reliability.

Stack optimization

Reliability optimization (forwarding/control-separated stack)

The forwarding/control-separated stack, also known as the out-of-band stack, is optimized primarily for high reliability.
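As a brief aside on the local-priority forwarding described earlier in this section, the selection logic can be sketched roughly as follows. This is a minimal illustration, not the CloudEngine implementation: the `MemberPort` type, the port names, and the CRC32 flow hash are all assumptions made for the example.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class MemberPort:
    """One member link of a cross-chassis link aggregation (hypothetical model)."""
    name: str
    chassis: int       # which stack member the port physically sits on
    up: bool = True


def select_egress(members, ingress_chassis, flow_key):
    """Pick an egress port, preferring ports on the chassis where traffic entered.

    Traffic only crosses the stack link when no local member port is up.
    """
    up_members = [m for m in members if m.up]
    if not up_members:
        return None
    local = [m for m in up_members if m.chassis == ingress_chassis]
    candidates = local or up_members   # fall back to remote ports via the stack link
    # Assumed hash: CRC32 of the flow key keeps one flow on one member link.
    index = zlib.crc32(flow_key.encode()) % len(candidates)
    return candidates[index]


lag = [MemberPort("chassis1-port", 1), MemberPort("chassis2-port", 2)]
print(select_egress(lag, ingress_chassis=1, flow_key="10.0.0.1->10.0.0.2").name)
```

With both member ports up, a flow entering chassis 1 leaves through the chassis 1 port and never touches the stack link. Only if that port goes down does the flow fall back to the port on chassis 2.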
In most stack switches in the industry, stack members share one channel for both control and forwarding. Huawei's CloudEngine 12800 series data center switches instead use a stacking system that separates forwarding from control. Here, "forwarding" refers to the service data forwarding channel, and "control" refers to the control message (also called "signaling") channel. In a traditional chassis stacking system, the service data channel and the control message channel use the same physical channel, namely the stack link, as shown in the figure.

In such a stacking system, control messages and data run mixed together. If data traffic on the stacking channel is heavy, control messages may be corrupted or lost, which affects the reliability of the control plane. Strictly speaking, this design does not meet the design requirement of "separating the data, control, and management planes". In addition, establishing the stacking system depends on the line cards starting up, which increases software complexity and slows stack startup.
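The risk of mixing control messages with data on one stack link can be illustrated with a toy queue model. This is only a sketch under stated assumptions: a single tail-drop FIFO per channel, fixed per-tick arrival rates, and made-up numbers for capacity and buffer size. Real switches use more sophisticated queuing, so read it as an intuition aid rather than a description of any product.

```python
from collections import deque


def dropped_per_kind(arrivals, capacity_per_tick, buffer_size, ticks):
    """Simulate one tail-drop FIFO channel and count drops per packet kind.

    arrivals: list of (kind, packets_per_tick), offered in this order each tick.
    All parameters are hypothetical values chosen for illustration.
    """
    queue = deque()
    dropped = {kind: 0 for kind, _ in arrivals}
    for _tick in range(ticks):
        for kind, count in arrivals:
            for _packet in range(count):
                if len(queue) < buffer_size:
                    queue.append(kind)
                else:
                    dropped[kind] += 1      # buffer full: packet is lost
        for _served in range(min(capacity_per_tick, len(queue))):
            queue.popleft()                 # link drains up to its capacity
    return dropped


# Shared stack link: data overload fills the buffer, so control messages are lost too.
shared = dropped_per_kind([("data", 12), ("control", 2)],
                          capacity_per_tick=10, buffer_size=20, ticks=50)

# Separated design: control messages have their own small dedicated channel.
control_only = dropped_per_kind([("control", 2)],
                                capacity_per_tick=2, buffer_size=4, ticks=50)

print("control drops, shared channel:   ", shared["control"])
print("control drops, dedicated channel:", control_only["control"])
```

In this model the shared channel starts losing control messages as soon as the overloaded data traffic fills the buffer, while the dedicated control channel is unaffected by data load.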
The forwarding/control-separated stacking system uses the architecture shown in the figure. This hardware stacking architecture brings a range of reliability improvements:

- The control message channel and the service data channel are physically isolated, so service data cannot affect control messages.
- Triple protection against dual-active faults: stack management links (4 channels), stack forwarding links (at least 2 channels), and DAD (dual-active detection) on service ports/management ports.
- Establishing the stack no longer depends on line card startup, so there is no software timing dependency. The software implementation is simpler, and simple means reliable.
- Establishing the stack no longer waits for the line cards/fabric boards to start, which shortens stack establishment time.
- The control message channel has a short path, fewer points of failure, and low latency.

Limitations of stacking

Stacking systems bring the benefits listed above, but some unpleasant problems have gradually emerged, and they follow from the nature of the stacking principle itself. As shown in the figure, the two switches form one logical switch through tight coupling of the management plane, control plane, and data plane. This leads to the following three risks or problems.

System-level reliability risk

For common faults, the stack system can complete fault protection through link switchover, active/standby switchover, and chassis switchover. However, because the two physical switches are tightly coupled in software (management plane and control plane), a software fault is more likely to spread from one switch to the other. When this type of fault occurs, the entire stacking system fails, affecting all services connected to it.

Long service interruption during version upgrades

The stack itself is responsible for service protection. When the stack system is upgraded, there is therefore no other node to protect traffic, unlike VRRP, where one member node carries traffic while the other is upgraded. The interruption is longer as a result. In response, vendors have developed two-chassis round-robin upgrades and ISSU. These methods shorten the service interruption during an upgrade, but they do not solve the upgrade risk described below. If anything, their technical and software-engineering complexity amplifies that risk.

Whole-system upgrade risk

Upgrading a device's software version is a risky network operation even with the most traditional and simple upgrade method. If the upgrade fails, the services carried by the device are lost, and every available means, including rollback, must be used to restore service as quickly as possible. Because the member switches are tightly coupled, the two devices must be upgraded together. If the upgrade fails, all service networks connected to the stack are interrupted. A stacking system often serves as the dual-homing access point for servers at the access layer, or as a high-reliability gateway. An upgrade failure is therefore likely to bring down the entire service.

Link-aggregation virtualization (M-LAG)

From a requirements perspective, horizontal virtualization exists to provide cross-device link redundancy between the access layer and the aggregation layer, and cross-device redundancy for the aggregation-layer L3 gateway. Is there another technology that supports horizontal virtualization without the problems of stacking? Of course: M-LAG (Multichassis Link Aggregation Group) on Huawei CloudEngine series data center switches is such a technology.
This technology virtualizes Layer 2 only at the link-aggregation level of the two devices. The management and control planes of the two member devices remain independent.

Note: Wikipedia refers to this technology as MC-LAG (Multi-Chassis Link Aggregation Group), and Cisco calls it vPC (Virtual Port-Channel). The rest of this article uses the Wikipedia abbreviation, MC-LAG.

MC-LAG supports cross-device link aggregation and a dual-active L3 gateway. On the access side, MC-LAG looks just like a stack from the perspective of the peer device or server. From the Layer 3 network perspective, however, the two member nodes of the MC-LAG each have their own independent IP address and their own independent management and control planes. Architecturally, the two member devices of an MC-LAG are coupled only in the data plane, with lightweight coupling in the protocol plane.

This architecture means that MC-LAG does not suffer from the three problems that are hard to solve with stacking.

So, with all these benefits, does MC-LAG have no drawbacks? Of course it does; every technology has its strengths and weaknesses. The last section compares the advantages and disadvantages of stacking and MC-LAG and gives recommendations for choosing between them.

Stacking and MC-LAG: comparison and selection recommendations

According to the comparison table, stacking and MC-LAG each have advantages and disadvantages. In general, for network design and maintenance personnel, stacking wins on simple management and maintenance, while MC-LAG wins on reliability and low upgrade risk. When designing a data center network solution, these considerations need to be weighed. The following strategies are available:

Strategy 1: The aggregation layer prioritizes reliability and ease of upgrade and uses M-LAG.
The access layer has a large number of devices, so it prioritizes ease of service deployment and maintenance and uses stacking.

Strategy 2: Prioritize reliability and low upgrade risk, and use M-LAG at both the aggregation and access layers.

Strategy 3: Prioritize ease of service deployment and maintenance, and use stacking at both the aggregation and access layers.
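The three strategies amount to a small decision table, which could be written down roughly as follows. This is only a sketch: the `Priority` enum, the `choose` function, and the dictionary layout are hypothetical names introduced for illustration. A combination the article does not discuss is reported with `strategy` set to `None`.

```python
from enum import Enum


class Priority(Enum):
    RELIABILITY = "reliability and low upgrade risk"
    SIMPLICITY = "simple deployment and maintenance"


# Technology favored by each priority, following the comparison in the article.
TECHNOLOGY = {
    Priority.RELIABILITY: "M-LAG",
    Priority.SIMPLICITY: "stacking",
}

# (aggregation priority, access priority) -> strategy number used in the article.
STRATEGIES = {
    (Priority.RELIABILITY, Priority.SIMPLICITY): 1,
    (Priority.RELIABILITY, Priority.RELIABILITY): 2,
    (Priority.SIMPLICITY, Priority.SIMPLICITY): 3,
}


def choose(aggregation, access):
    """Map per-layer priorities to a technology per layer."""
    return {
        # None means the combination is not one of the article's three strategies.
        "strategy": STRATEGIES.get((aggregation, access)),
        "aggregation": TECHNOLOGY[aggregation],
        "access": TECHNOLOGY[access],
    }


print(choose(Priority.RELIABILITY, Priority.SIMPLICITY))
```

For example, prioritizing reliability at the aggregation layer and simplicity at the access layer selects Strategy 1: M-LAG for aggregation and stacking for access.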