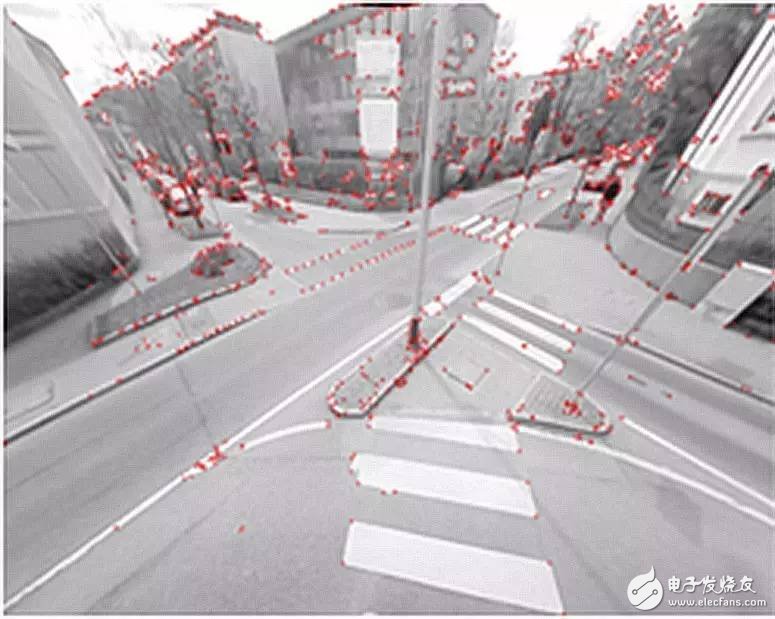

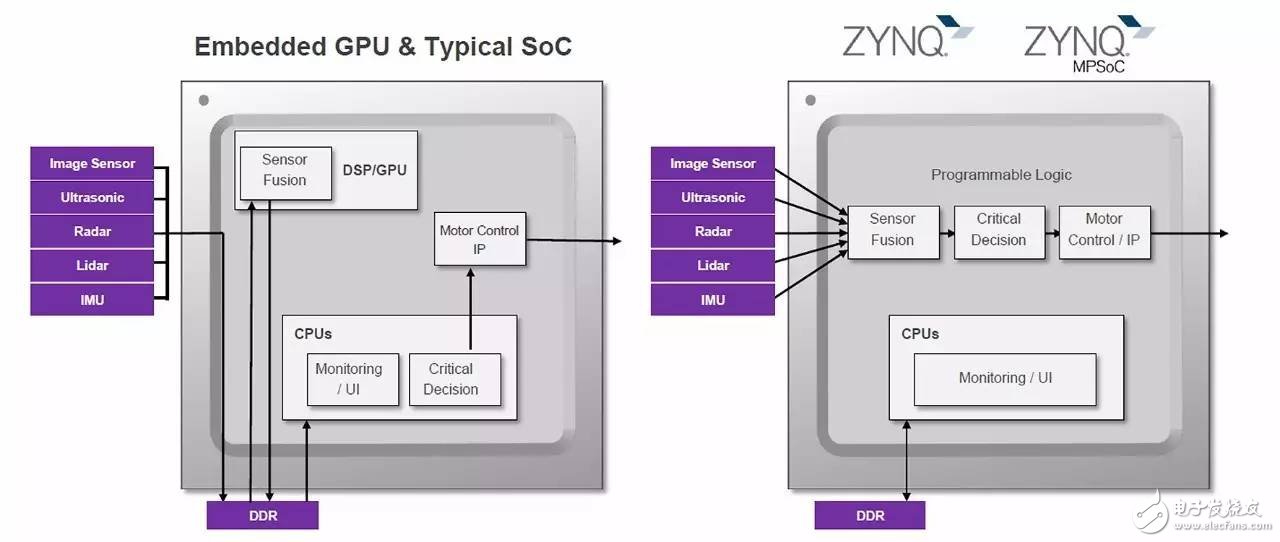

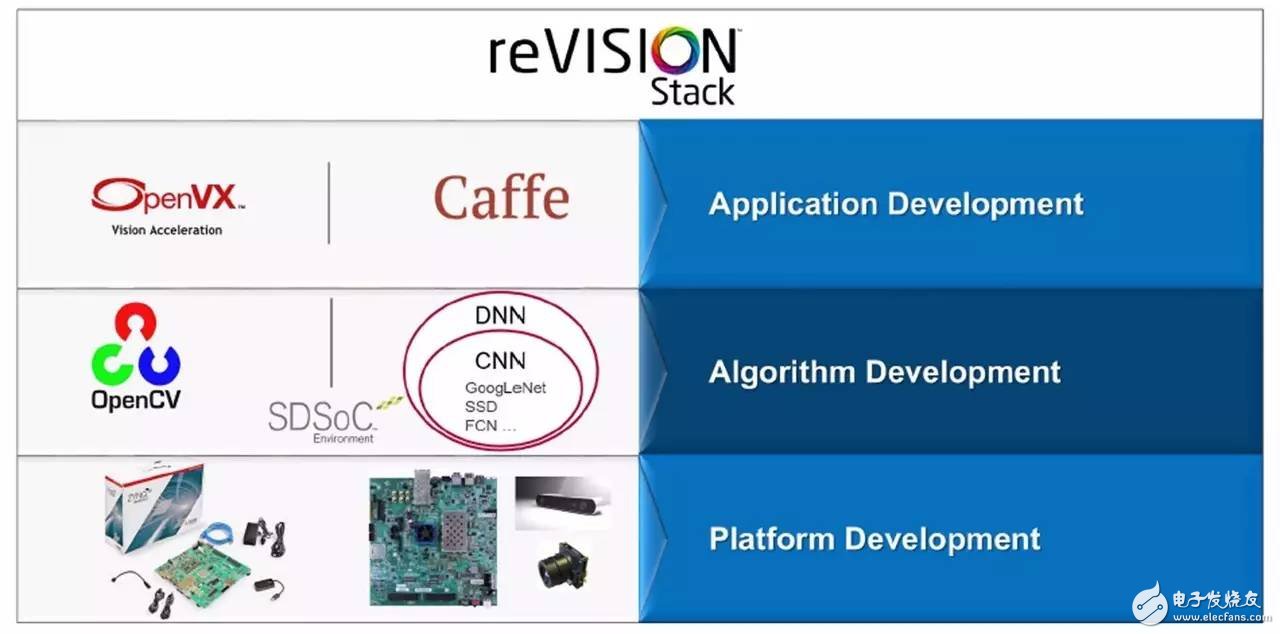

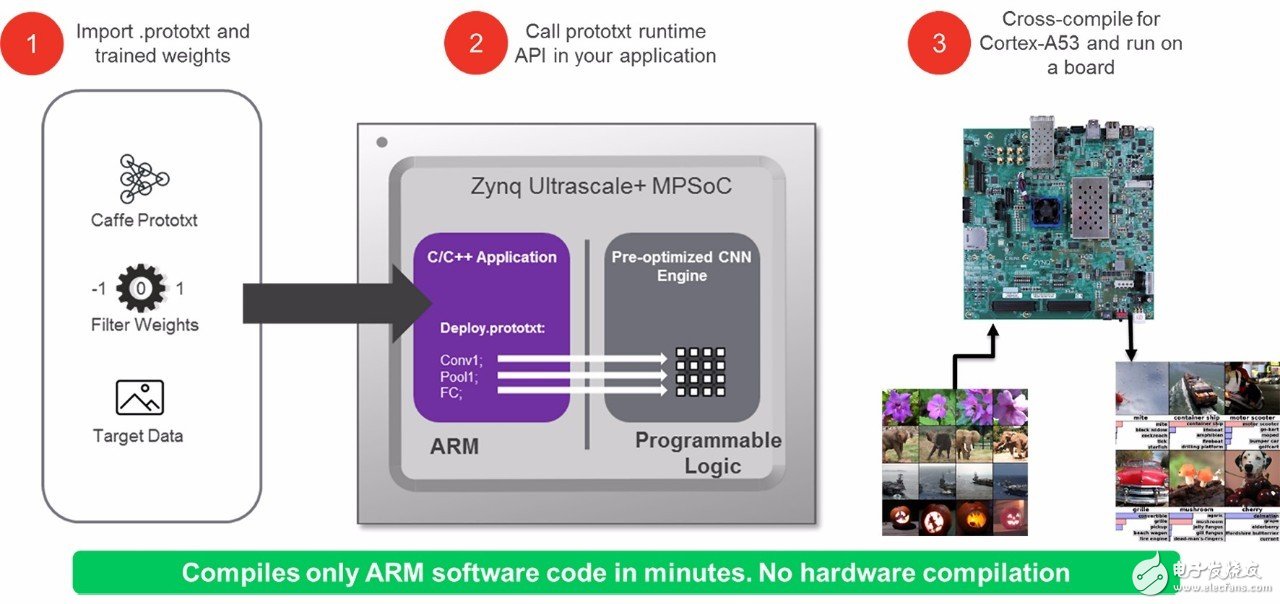

Authors: Nick Ni and Adam Taylor

Surveillance systems rely heavily on the capabilities of embedded vision systems to accelerate deployment across a wide range of markets and systems. These surveillance systems are used in a wide variety of applications, including event and traffic monitoring, safety and security applications, ISR (intelligence, surveillance, and reconnaissance), and business intelligence. This diversity of uses also brings several challenges that system designers must address in their solutions:

- Multi-camera vision: the ability to connect multiple homogeneous or heterogeneous sensor types.
- Computer vision technology: the ability to develop using high-level libraries and frameworks such as OpenCV and OpenVX.
- Machine learning technology: the ability to implement a machine learning inference engine using a framework such as Caffe.
- Higher resolution and frame rate: both increase the amount of data that must be processed for each image frame.

Depending on the application, the surveillance system implements a suitable algorithm, such as optical flow, to detect motion within the image. Stereo vision provides depth perception within an image, and machine learning techniques are used to detect and classify objects in the image.

Figure 1 - Example application (top: face detection and classification, bottom: optical flow)

Heterogeneous SoC devices, such as the All Programmable Zynq®-7000 and Zynq® UltraScale+™ MPSoC, are increasingly being used in the development of surveillance systems. These devices combine programmable logic (PL) fabric with a high-performance ARM® processing system (PS). The tight coupling of PL and PS makes the resulting system more responsive, more reconfigurable, and more energy efficient than traditional solutions. Traditional CPU/GPU-based SoCs require system memory to transfer images from one processing stage to the next.
This reduces determinism and increases power consumption and system response latency, because multiple resources need to access the same memory, which creates a bottleneck in the processing algorithm. The bottleneck gets worse as frame rate and image resolution increase.

The bottleneck is removed when the solution is implemented with Zynq-7000 or Zynq UltraScale+ MPSoC devices. These devices allow designers to implement the image processing pipeline in the PL of the device. The result is a truly parallel image pipeline in the PL, in which the output of one stage is passed directly to the input of the next. This gives a deterministic response time, lower latency, and a more power-efficient solution.

Using the PL for the image processing pipeline also provides wider interfacing capability than traditional CPU/GPU SoC solutions, which offer only fixed interfaces. The flexible nature of the PL I/O allows any-to-any connectivity and supports industry-standard interfaces such as MIPI, Camera Link, and HDMI. The same flexibility makes it possible to support custom legacy interfaces and to upgrade to the latest interface standards. The PL also allows multiple cameras to be connected in parallel.

Most critical, however, is being able to implement the application algorithms without having to rewrite high-level algorithms in a hardware description language such as Verilog or VHDL. This is where the reVISION™ stack comes in.

Figure 2 - Comparison of a traditional CPU/GPU solution with the Zynq-7000/Zynq UltraScale+ MPSoC reVISION stack

The reVISION stack enables developers to implement computer vision and machine learning techniques using the same high-level frameworks and libraries when targeting Zynq-7000 and Zynq UltraScale+ MPSoC devices. To this end, reVISION combines the resources needed to support platform, application, and algorithm development.
The stack is divided into three levels:

- Platform development: the bottom layer of the stack and the basis on which the remaining layers are built. This layer provides a platform definition for the SDSoC™ tool.
- Algorithm development: the middle layer of the stack, which supports the implementation of the required algorithms. This layer facilitates moving image processing functions and machine learning inference engines into the programmable logic.
- Application development: the top layer of the stack, which provides support for industry-standard frameworks. This layer is used to develop applications that build on the platform development and algorithm development layers.

The algorithm and application layers of the stack support both traditional image processing flows and machine learning flows. In the algorithm layer, image processing algorithms are developed using the OpenCV library. This includes the ability to accelerate a range of OpenCV functions, including a subset of the OpenVX kernels, into the programmable logic. To support machine learning, the algorithm development layer provides several predefined hardware functions that can be placed in the PL to implement the machine learning inference engine. The application development layer then accesses these image processing algorithms and machine learning inference engines to create the final application, with support for high-level frameworks such as OpenVX and Caffe.

Figure 3 - reVISION stack

The reVISION stack provides all the elements needed to implement the algorithms required for a high-performance surveillance system.

Accelerating OpenCV in reVISION

One of the most important advantages of the algorithm development layer is the ability to accelerate a wide range of OpenCV functions. In this layer, the accelerated OpenCV functionality is divided into four high-level categories:
- Computation: functions include the absolute difference between two frames, pixel-wise operations (addition, subtraction, and multiplication), gradients, and integral operations.
- Input processing: supports bit-depth conversion, channel operations, histogram equalization, remapping, and resizing.
- Filtering: supports a range of filters, including Sobel, custom convolution, and Gaussian filters.
- Other: offers a variety of functions, including Canny edge detection, FAST and Harris corner detection, thresholding, and SVM and HOG classifiers.

These functions form the core of the OpenVX subset and are tightly integrated with the OpenVX support in the application development layer. Development teams can use them to build algorithmic pipelines in the programmable logic. Implementing the functions in logic in this way can significantly improve the performance of the algorithm.

Machine learning in reVISION

reVISION provides integration with Caffe to implement machine learning inference engines. This integration takes place at both the algorithm development layer and the application development layer. The Caffe framework gives developers a large collection of functions, models, and pre-trained weights in a C++ library, along with Python™ and MATLAB® bindings. The framework lets users create and train networks to perform the required operations without having to start from scratch. To facilitate model reuse, Caffe users can share models through the Model Zoo, which offers multiple network models that users can adopt and update for specialized tasks. These networks and weights are defined in a prototxt file, which is used to define the inference engine when it is deployed in a machine learning environment.

The Caffe integration in reVISION makes implementing a machine learning inference engine very simple: the developer only has to provide a prototxt file, and the framework does the rest.
The prototxt file is then used to configure the processing system and the hardware-optimized libraries in the programmable logic. The programmable logic implements the inference engine and includes functions such as Conv, ReLU, and Pooling.

Figure 4 - Caffe Process Integration

The number representation used in the machine learning inference engine also plays an important role in performance. Machine learning increasingly uses more efficient, reduced-precision fixed-point number systems, such as the INT8 representation. Compared with the traditional 32-bit floating-point (FP32) approach, a reduced-precision fixed-point number system does not cause a large loss of accuracy. Fixed-point operations are also easier to implement than floating-point operations, so moving to INT8 enables a more efficient and faster solution.

Programmable logic is well suited to fixed-point arithmetic, and reVISION can use the INT8 representation in the PL. With INT8, the dedicated DSP blocks in the PL can be used. The architecture of these DSP blocks allows two INT8 multiply-accumulate operations to be performed concurrently when they share the same kernel weights. This not only gives a high-performance implementation but also reduces power consumption. Thanks to the flexible nature of programmable logic, it is also straightforward to implement fixed-point representations of even lower precision.

Conclusion

reVISION enables developers to take advantage of the capabilities offered by the Zynq-7000 and Zynq UltraScale+ MPSoC devices. Moreover, developers do not need to be experts in programmable logic to implement their algorithms. These algorithms and machine learning applications can be implemented through high-level, industry-standard frameworks, which reduces system development time. This enables developers to deliver systems that are more responsive, more reconfigurable, and more power efficient.
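
As a rough software illustration of the INT8 point made in the machine learning section, the sketch below shows how two INT8 multiplications that share one operand can be folded into a single wide integer multiplication and then separated again. It is only an arithmetic model, not reVISION code or a description of the actual DSP block: the 18-bit offset (`SHIFT`) and the function names `packed_int8_multiply` and `packed_dot` are assumptions made for this example, and the model separates each product before accumulating, which real hardware need not do.

```python
"""Arithmetic model: two INT8 multiplies that share one operand, done as one multiply."""

SHIFT = 18                 # assumed bit offset between the two packed operands
MASK = (1 << SHIFT) - 1    # selects the low SHIFT bits of the wide product


def check_int8(value):
    """Reject anything that does not fit in a signed 8-bit integer."""
    if not -128 <= value <= 127:
        raise ValueError(f"{value} does not fit in signed INT8")
    return value


def packed_int8_multiply(x0, x1, w):
    """Return (x0 * w, x1 * w) using a single integer multiplication."""
    for v in (x0, x1, w):
        check_int8(v)

    # Place x0 in the upper bits and x1 in the lower bits of one wide operand.
    packed = (x0 << SHIFT) + x1

    # One multiplication now yields x0*w * 2**SHIFT + x1*w.
    product = packed * w

    # The low field holds x1*w as a signed SHIFT-bit number.
    low = product & MASK
    if low >= 1 << (SHIFT - 1):
        low -= 1 << SHIFT

    # Removing the low field first undoes the borrow caused by a negative x1*w.
    high = (product - low) >> SHIFT
    return high, low


def packed_dot(xs0, xs1, ws):
    """Two dot products against the same weights, one multiply per weight."""
    acc0 = acc1 = 0
    for x0, x1, w in zip(xs0, xs1, ws):
        p0, p1 = packed_int8_multiply(x0, x1, w)
        acc0 += p0
        acc1 += p1
    return acc0, acc1


if __name__ == "__main__":
    print(packed_int8_multiply(-3, 5, -7))                   # (21, -35)
    print(packed_dot([1, -2, 3], [4, 5, -6], [7, 8, 9]))     # (18, 14)
```

The sign correction on the low field is the part that is easy to get wrong: when `x1 * w` is negative it borrows from the upper field, so the upper result has to be recovered after subtracting the signed low value rather than by a plain shift.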
