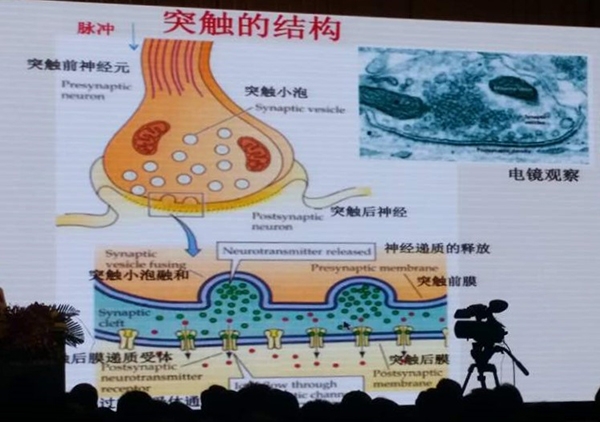

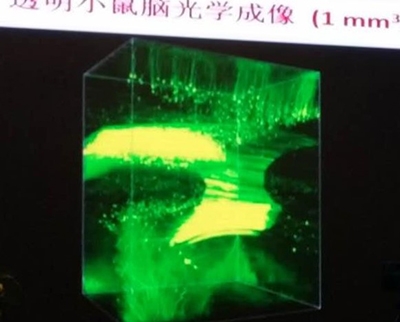

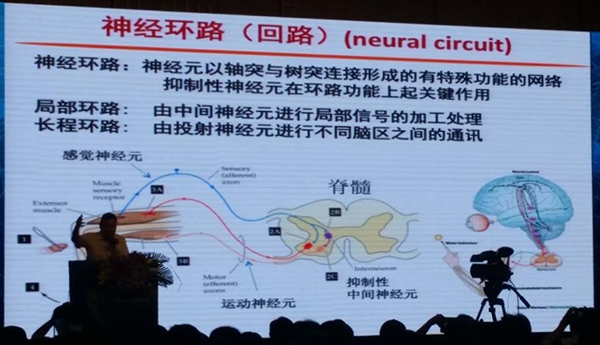

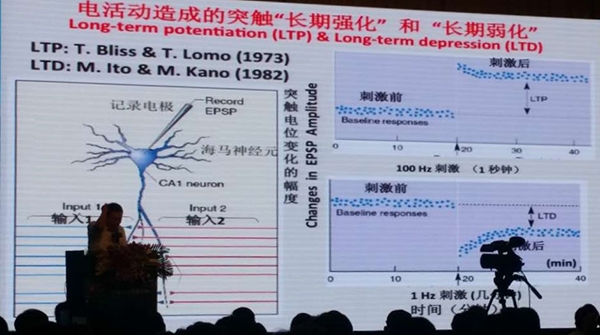

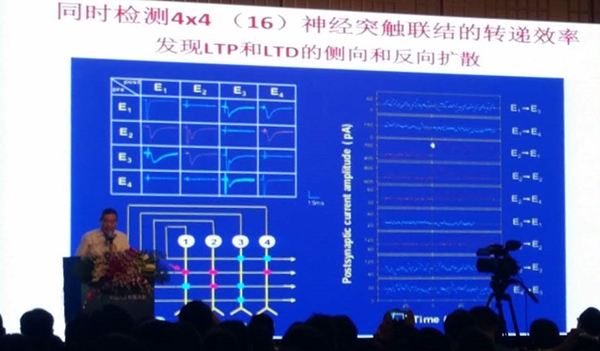

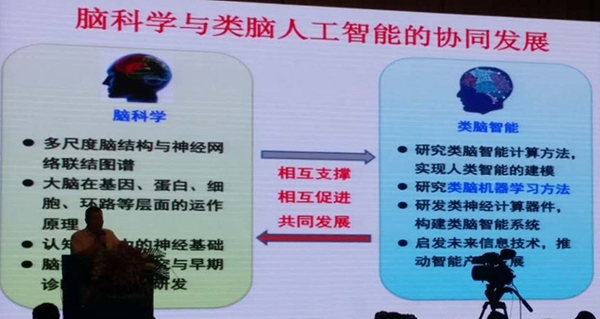

We have mentioned that one school of artificial intelligence research holds that faithfully simulating the brain is the key to creating artificial intelligence. In fact, however, even today we still do not understand how the human brain works, let alone how to simulate it accurately. The study of the brain, and artificial intelligence research based on it, have long outgrown what any single discipline can handle. They require collaboration across many disciplines, and perhaps only top talent can understand these fields and ultimately push them forward. Yet even our current, still very shallow understanding of the human brain already forms an extremely beautiful picture. The mysteries it contains leave us in no doubt that our brain is the most subtle (and naturally evolved) structure in the world. These studies also tell us how much untapped potential there is, for both human intelligence and artificial intelligence. If conditions mature and brain science and computer science become more closely integrated, no one can imagine how much energy might be released.

At the just-concluded China Artificial Intelligence Conference (CCAI 2016), Academician Pu Muming (Mu-ming Poo), a foreign member of the Chinese Academy of Sciences and director of the Chinese Academy of Sciences Institute of Neuroscience, described the latest developments in neuroscience in detail. The rigorous thinking and experimental methods in his talk deserve praise. Lei Feng Network has lightly edited the full transcript and added annotations. As you read, imagine what sparks are flying between the nerve cells of your remarkable brain.

Speaker: Pu Muming

I am very glad to have the opportunity to attend this meeting today.
Today I would like to report some progress in neuroscience, along with some of my personal opinions, in the hope that they will help the future development of artificial intelligence.

The overall structure of the brain

First, at the simplest level, the nervous system has two major parts. The first is the central nervous system, which includes the brain, cerebellum, brainstem, and spinal cord. The other is the peripheral nervous system, which also includes the autonomic nervous system that serves the internal organs. What we call brain science focuses mainly on the brain, so brain science is part of neuroscience. The most important part of the brain is the cerebral cortex, the most advanced part of our humanity. Many structures beneath it, called subcortical structures, appeared relatively early in evolution, but from monkeys to apes to humans the cortex expanded greatly, and it is the main source of human cognition. The main concern of brain science is the various functions of the cortex.

We now know that different parts of the cerebral cortex are in charge of different functions; that is, function is localized. Damage to a given area causes loss of the corresponding brain function. For example, if the language area is damaged, we cannot speak, and if the visual cortex is damaged, we cannot see. This has been confirmed experimentally many times and is now widely accepted. We can observe brain activity with positron emission tomography (PET): a labeled glucose tracer is injected into a normal subject, and a signal appears wherever activity occurs. In experiments, whenever the subject looks at text or other things, there is activity at the back of the brain, so we can basically conclude that this area is in charge of vision. When we ask the subject to speak a few words, a different area becomes active; this is basically a language area.
But we also observed a very surprising phenomenon. When we asked the subject to do nothing but imagine a few words, the entire cerebral cortex became active. This shows that although imagining a few words seems very simple, it actually involves many parts of the brain. Why this is so, we still have not figured out; how thinking is carried out in the brain remains unknown to brain science.

Lei Feng Network Note: Positron emission tomography (PET) is one of the most widely used brain imaging techniques. Glucose containing trace amounts of a radioactive element, harmless to human health, is injected, and an instrument outside the head detects the radiation emitted from the brain. A working brain region consumes energy and absorbs more glucose, so we only need to see which region's radiation signal grows stronger to judge that the region is working. The fact that "imagining" engages most of the brain's neural structures fits the hypothesis that the human brain has very great latent power, yet humans cannot compute in the most efficient way when doing mental arithmetic, because the brain lacks that ability. The brain first converts a formula into abstract concepts, which calls on the visual centers; carrying out the calculation calls on many other advanced functions; and for more complex formulas, keeping track of borrowing and carrying also calls on memory. It is this "inefficient" way of calculating that leaves the brain's computing ability far behind a computer's.

Whether in brain experiments on fish and other animals or on humans, we have found that even when the subject is doing nothing, the brain shows a great deal of spontaneous activity. What it means, we still do not know.
This is a big problem currently facing the study of the nervous system.

Neurons and Synapses

To study further, we slice the nervous system and find that it is densely packed with nerve cells. If we stain only a few cells, we see that they form many reticular (net-like) structures. These structures are called neural networks, and the functions of the nervous system depend on them. The human brain contains on the order of a hundred billion nerve cells, which we call neurons. Interconnected, they form an enormously complex neural network that supports perception, movement, thinking, and other functions. Structurally, a neuron has an input and an output: its output is called the axon and its input the dendrite. (Lei Feng Network interjects: much of this is covered in high school biology.)

Here we show an experiment from a Stanford University laboratory. Using a specific fluorescent staining method, we see that the mouse brain contains a regular and very complex network. In this picture, the cortical structure at the top is relatively orderly, while the region below has a different pattern. What we have just seen is the mesoscopic level (Note: a scale between the macroscopic and the microscopic). This level is best for exploring the different roles of different types of nerve cells: the macroscopic level is too coarse, and at the microscopic level the relationships between cells become unclear. Mapping the mesoscopic structure is only the first step. We also need to understand its functions: how it processes information and by what mechanisms.
Over the past many years we have obtained a clearer answer to this question, and a series of Nobel Prizes were awarded along the way. Information is transmitted between cells by pulses, and the information they carry is determined by the frequency and timing of the pulses, not by their amplitude. Pulses travel within a cell. When one cell needs to communicate with the next, it releases chemical substances stored in vesicles at its axon terminals. These act on the dendrites of the receiving cell, causing ions to flow across its membrane and changing its membrane potential. We call this rather complicated process chemical synaptic transmission. Because the process passes through the space outside the cells, it can be regulated: external means can promote or suppress the signal and thus intervene in the process and outcome of transmission.

Lei Feng Network Note: Today, an artificial neural network with more than 200 layers is already remarkable, yet nobody knows how many layers the brain's neurons could be divided into. Although artificial neural networks were inspired by biological neural networks, their working principles are not very similar. Readers who were good at biology may remember (and don't be discouraged if you've forgotten, XD) that when high school textbooks cover neurons, teachers often use anesthetics as an example. Anesthetics work by blocking ion channels on nerve cells, so that the signals representing pain become very small or cannot reach our brain at all, temporarily eliminating the sensation of pain. This is a typical case of intervening in this mode of transmission.

In addition, how much chemical substance is released at each synapse, and how much is received, can change, which makes synapses highly plastic.
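The claim that pulses carry information through their frequency and timing rather than their amplitude is often illustrated with a leaky integrate-and-fire neuron. This is a textbook simplification chosen here for illustration, not a model from the talk. The millivolt values, the per-step leak, and the constant per-step input are all assumptions. Every spike in the sketch is identical; only how often spikes occur changes with input strength. Positive inputs play the role of excitatory synaptic potentials and negative ones of inhibitory potentials, and their running sum decides whether the threshold is crossed.

```python
def lif_spike_train(inputs, v_rest=-70.0, v_threshold=-55.0, v_reset=-70.0, leak=0.1):
    """Simulate a leaky integrate-and-fire neuron (illustrative parameters).

    inputs: synaptic input in mV for each time step. Positive values act
    like excitatory potentials (depolarizing), negative values like
    inhibitory potentials (hyperpolarizing).
    Returns a list of 0/1 values, with 1 marking a time step with a spike.
    """
    v = v_rest
    spikes = []
    for i in inputs:
        # The leak pulls the potential back toward rest; the input pushes it away.
        v += -leak * (v - v_rest) + i
        if v >= v_threshold:
            spikes.append(1)  # all-or-none spike: its size never varies
            v = v_reset
        else:
            spikes.append(0)
    return spikes


weak = lif_spike_train([2.0] * 100)
strong = lif_spike_train([5.0] * 100)
print(sum(weak), sum(strong))  # prints: 7 25
```

A stronger input shows up only as a higher spike count (a higher frequency), which is the sense in which the amplitude of a single pulse carries no information.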
Synaptic plasticity is a crucial key to information processing in the nervous system, and it is also the key to cognition and learning. The electrical signal that these chemicals produce at a synapse is called the synaptic potential. An excitatory postsynaptic potential (EPSP) depolarizes the membrane. If the depolarization exceeds a threshold, the neuron fires a pulse, which is the signal the neuron sends out. Some substances instead hyperpolarize the membrane, making the membrane potential more negative and having the opposite effect; this is an inhibitory postsynaptic potential (IPSP). A neuron receives hundreds or even thousands of inputs. Their EPSPs and IPSPs are summed, and the sum determines whether the threshold is exceeded. If it is, the neuron fires and the integrated signal is transmitted to the next neuron. This is the principle of information transfer.

Circuits, networks, and neural activity

Next comes a more complex structure: the circuit. We can think of it this way. The overall interconnection of neurons is called the network, and within the network there are many pathways with special functions, called circuits. In other words, a neural circuit is a network with a special function built from neurons, also formed by axons and dendrites. In these circuits, inhibitory neurons play a key role; many behaviors are accomplished through the joint action of excitation and inhibition.

In my personal view, the synaptic plasticity mentioned earlier is the most important insight into the brain gained in the past 50 years, because it indirectly validates a hypothesis proposed more than 60 years ago by the Canadian psychologist Donald Olding Hebb: if two cells repeatedly show synchronous electrical activity, the synaptic connection between them is strengthened or stabilized.
If their activity is asynchronous, these synapses weaken or even disappear. This is called Hebb's learning rule. Put simply, if the brain is often stimulated by the same thing (so that the same electrical signals occur between neurons), it becomes more and more sensitive to that thing. This idea was confirmed experimentally in the 1970s and 1980s: electrical activity can cause long-term strengthening or long-term weakening of synapses. If a synapse is stimulated at high frequency for one second, its response after stimulation is larger than before, and this increase can last a long time. With low-frequency stimulation the result is the opposite: synaptic efficacy declines and the synapse becomes weaker. Recently, several laboratories have found that in living mice, learning produces new synapses between neurons. They later observed that new synapses also form in the brains of adult animals, though much less often than during development.

Hebb's hypothesis is remarkable, and neuronal plasticity supports it. It can be extended into a hypothesis about how perceptual memories form. When perceptual information reaches the brain, the connections among a group of neurons are strengthened, and these strengthened connections are in fact the memory. The cells that are activated and linked by the perceived information are called a Hebbian cell assembly. Because of the strong connections among them, stimulating the assembly later with just part of the information is enough to reactivate the whole assembly, thereby retrieving the entire memory.
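Hebb's rule, and the idea that a partial cue can reactivate a whole cell assembly, are often formalized as an associative (Hopfield-style) network. The sketch below is one such formalization, an illustrative choice rather than anything described in the talk. Each neuron is +1 (active) or -1 (silent). Two patterns are stored with a Hebbian outer-product rule, so co-active pairs get positive weights. A cue that specifies only half of the units (0 means "unknown") is then allowed to settle. The pattern size and contents are invented for the example.

```python
def hebbian_weights(patterns):
    """Store +1/-1 activity patterns with a Hebbian outer-product rule.

    Units that are active together (same sign) get a positive weight;
    units with opposite activity get a negative one. No self-connections.
    """
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, cue, steps=5):
    """Let activity settle from a partial cue (0 marks an unknown unit)."""
    state = list(cue)
    n = len(state)
    for _ in range(steps):
        state = [1 if sum(w[i][j] * state[j] for j in range(n)) >= 0 else -1
                 for i in range(n)]
    return state


assembly_a = [1, 1, 1, 1, -1, -1, -1, -1]
assembly_b = [1, -1, 1, -1, 1, -1, 1, -1]
w = hebbian_weights([assembly_a, assembly_b])
partial_cue = [1, 1, 1, 1, 0, 0, 0, 0]  # only the first half is "remembered"
print(recall(w, partial_cue) == assembly_a)  # True: the whole assembly is retrieved
```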
Lei Feng Network Note: Most of us have had this experience. When we try to recall something on our own, we often come up with very little; you may not remember at all what you ate for lunch three days ago. But if it suddenly comes back to you one day, or some extra piece of information tells you that lunch that day was with a good friend you hadn't seen for a long time, you may recall what you ate, and even other scattered details of the occasion, all at once. This phenomenon agrees well with the hypothesis.

Hebb's hypothesis also had a turning point, just as the development of artificial intelligence has had turning points. The original hypothesis did not specify what "synchronous" means: in what order, and with what timing, the two neurons must fire. Many later experiments showed that Hebb's hypothesis should be revised into a timing-based hypothesis that emphasizes the order of pre- and postsynaptic firing. If presynaptic activity comes before postsynaptic activity, the synapse is strengthened; if it comes after, the synapse is weakened.

The formation of networks during development is very important. We observe that in many mammals, humans included, there is little network at birth: the neurons are there, but the connections between them are sparse, and most of the network is built after birth. Learning is the process by which the network becomes complex and effective. During development, new synapses form very frequently and network complexity rises quickly. This still happens in adulthood, but much less.
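The timing-based revision of Hebb's rule described above is usually called spike-timing-dependent plasticity (STDP). In that revision, presynaptic firing before postsynaptic firing strengthens the synapse, and the reverse order weakens it. A common way to model this is a pair-based rule with exponential time windows. The amplitudes and the 20 ms time constants in this sketch are illustrative assumptions, not values given in the talk.

```python
import math


def stdp_weight_change(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                       tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP rule; spike times in milliseconds.

    dt > 0 (pre fires before post): potentiation, shrinking as |dt| grows.
    dt < 0 (post fires before pre): depression.
    Parameter values are illustrative assumptions.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


print(stdp_weight_change(t_pre=10.0, t_post=15.0) > 0)  # True: pre before post
print(stdp_weight_change(t_pre=15.0, t_post=10.0) < 0)  # True: post before pre
```

Because the change shrinks as the spikes move further apart, only firing that is close in time, in the right order, strengthens the synapse appreciably.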
New synapses form less often in adults because most sites have already changed, leaving little room for new adjustments and new synapses.

Finally, let me turn to artificial intelligence. Today's popular deep learning is a very successful application of artificial neural networks, and these networks were inspired by some principles of neuroscience. The parameters in the network are improved using feedback from the results. The results are very good, but artificial and biological neural networks differ in how the network is adjusted. You have to change the efficacy of the inputs based on the output, yet information flows from input to output. How do you send the output signal back? In the biological nervous system this is obviously not done by a mathematical algorithm; the nervous system has no such mechanism.

Lei Feng Network Note: An artificial neural network usually adjusts its weights directly, by computing the difference between the expected and actual results together with each node's weights. But the structure of the synapse means that signal transmission in a biological neural network can only be one-way. So how could the output signal be fed back to the input?

Pu Muming and his team carried out the following experiment. We cultured a group of interconnected cells and recorded from four of them, which have 16 possible connections among them. At the start, however, only some of these 16 connections actually showed electrical activity. We wanted to change the strength of one connection and see whether this affected the others. For example, we strengthened the connection from E1 to E2, and its synaptic potential was enhanced. But we found that, besides this connection, 4 other connections were also strengthened: strengthening one connection caused others to strengthen as well.
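The error-feedback step described in the note (compare the expected and actual results, then adjust the weights) appears in its simplest form in the delta rule for a single linear unit. The delta rule is the one-layer case of the gradient-based learning that backpropagation extends to many layers. This minimal sketch uses an assumed learning rate and toy data. It makes explicit the step the talk says has no obvious biological counterpart: an error computed at the output is sent back to every input weight.

```python
def delta_rule_step(weights, x, target, lr=0.1):
    """One error-driven update of a single linear unit (the delta rule)."""
    y = sum(w * xi for w, xi in zip(weights, x))  # forward: input -> output
    error = target - y                            # compare with the expected result
    # Backward: the output error is sent back to adjust every input weight.
    return [w + lr * error * xi for w, xi in zip(weights, x)]


weights = [0.0, 0.0]
for _ in range(50):
    weights = delta_rule_step(weights, x=[1.0, 2.0], target=1.0)
output = sum(w * xi for w, xi in zip(weights, [1.0, 2.0]))
print(round(output, 6))  # 1.0: the output now matches the target
```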
The four-cell experiment shows that changes in synaptic potential do indeed spread (the output signal is passed back to the input in some way), and we went on to study the regularities of this phenomenon. I was very happy to write up the study. It was published in Nature in 1997 under the title "Propagation of activity-dependent synaptic depression in simple neural networks". The article set two records. First, at 10 pages, it was the longest article in Nature. Second, it had the lowest citation rate, because at the time nobody understood why we did this experiment. Neuroscientists did not see what the result was good for, and artificial intelligence researchers do not read bioscience papers. In recent years, however, its citations have slowly begun to rise, and I hope everyone will pay attention to it. We spent 10 years showing that both the strengthening and the weakening of synapses can propagate backward and laterally. I think this phenomenon is quite interesting. Could this mechanism be applied in artificial intelligence?

Mutual inspiration between artificial intelligence and brain science

To summarize, here are five features that artificial intelligence may borrow from biological neural networks.

The first is that our current artificial neural networks have no distinct types of nodes: all units are the same. But the key to the nervous system is the inhibitory neuron; without inhibitory neurons it cannot function. Inhibitory and excitatory neurons also have subtypes, so not every unit has the same properties. Some units respond to high-frequency signals but not to low-frequency ones, and the subtypes have various signal-transmission characteristics. This is a feature artificial intelligence could consider adding in the future.

The second point concerns the direction of connections in neural networks.
Artificial networks today are feedforward, but biological networks also have backward and lateral connections, which can be inhibitory or excitatory. There are three kinds of inhibitory circuit. The first is feedback inhibition: after neurons are activated, their axons drive neighboring inhibitory neurons, which in turn inhibit them. This keeps activity under control instead of letting it rise without limit, suppressing high-amplitude electrical activity. The second is feedforward inhibition: besides reaching excitatory neurons, the same input also reaches nearby inhibitory neurons, whose inhibition arrives after a delay. Because of this delay, the beginning of a series of signals gets through while the later part is erased, which makes timing more precise. The third is lateral (flanking) inhibition, which sharpens information in the nervous system. The most strongly stimulated neurons in the middle are enhanced while their neighbors are pushed down, highlighting the pathway that should be strengthened and suppressing the pathways that should not. All of these are produced by inhibitory neurons.

The third, and most important, feature worth borrowing is synaptic plasticity. One form is functional plasticity: the increase or decrease of synaptic efficacy, that is, LTP (long-term potentiation) and LTD (long-term depression). What we need to pay attention to are the rules for strengthening and weakening. Whether LTP or LTD occurs can be determined by the frequency of pre- and postsynaptic electrical activity, but also by timing, that is, the order in which the pre- and postsynaptic neurons fire. We can think about how to apply similar rules in artificial neural networks. Another form is structural plasticity: synapses are newly created and pruned during development, so the connections themselves can change. In artificial neural networks the connections cannot change; only the synaptic weights do.
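Lateral (flanking) inhibition, described above as highlighting the most strongly driven pathway while pressing down its neighbors, can be sketched with a one-dimensional row of units. Each unit is suppressed in proportion to its neighbors' activity. The single pass, the nearest-neighbor connectivity, and the inhibition strength of 0.4 are simplifying assumptions for illustration.

```python
def lateral_inhibition(activity, strength=0.4):
    """One pass of nearest-neighbour lateral inhibition on a row of units.

    Each unit's output is its own activity minus `strength` times the sum of
    its two neighbours' activity, rectified at zero (firing rates cannot be
    negative). Units at the edges have only one neighbour.
    """
    n = len(activity)
    out = []
    for i, a in enumerate(activity):
        left = activity[i - 1] if i > 0 else 0.0
        right = activity[i + 1] if i < n - 1 else 0.0
        out.append(max(0.0, a - strength * (left + right)))
    return out


print(lateral_inhibition([1.0, 2.0, 5.0, 2.0, 1.0]))
```

The peak in the middle stays strong while its immediate neighbors are driven to zero, so the contrast between the favored pathway and its surroundings increases.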
In a laboratory at the Institute of Computing, we added connection variability of this kind (structural plasticity) to artificial networks and found that it works well. Another form is the propagation of plasticity. The propagation of LTD is a kind of natural BP (backpropagation), but why can't LTP be propagated too? Why must every change be specified? Plasticity can propagate on its own, backward and laterally, and it can be spontaneous: neurons do not need to be instructed, one by one in a set order, to change their synapses.

The fourth category is memory storage, retrieval, and fading. According to our hypothesis, memory arises as follows. Sensory experience, or the activation of other memories, strengthens connections or prunes structure within groups of synapses, and this is how the network stores information. Modifications in the nervous system fade over time. LTP and LTD are not necessarily long-term; if induced only once, they may disappear after a few minutes. This makes sense: if every synapse could be strengthened or weakened permanently, you would not get meaningful information. So forgetting is built in, and short-term and long-term memory can be handled by the same network. Short-term memory dissipates, while repeated, regular, meaningful information converts the strengthening and weakening of synapses in the network into long-term and structural changes. That is the mechanism of the nervous system. Where is memory stored? In this network. By reactivating part of a synaptic group, the memory can be retrieved. In artificial intelligence, reinforcement learning currently uses an algorithm to perform this strengthening, but we could consider adding a special neural unit to perform this function.

The last is Hebb's theory and its most interesting concept, the cell assembly (cluster). Hebb's assemblies are nested: they can be used to store memories of images and to form concepts.
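The idea that a single induction of LTP or LTD fades within minutes, while repeated activity is converted into a lasting change, can be captured in a toy two-stage synapse. Everything in this sketch is a hypothetical illustration: the function name `simulate_synapse`, the 5-minute decay constant, and the consolidation threshold are assumptions, not mechanisms or values from the talk.

```python
import math


def simulate_synapse(event_times, t_end, tau=5.0, consolidate_at=2.5):
    """Toy two-stage synapse; times in minutes, all parameters hypothetical.

    Each stimulation adds 1.0 to a short-term trace that decays exponentially
    with time constant `tau`. If the trace reaches `consolidate_at` (events
    repeated close together), it is moved into a long-term weight that does
    not decay. Returns (short_term, long_term) at time `t_end`.
    Event times are assumed to be >= 0.
    """
    short, long_term, t_last = 0.0, 0.0, 0.0
    for t in sorted(event_times):
        short *= math.exp(-(t - t_last) / tau)  # forgetting since the last event
        short += 1.0
        t_last = t
        if short >= consolidate_at:             # repeated activity: consolidate
            long_term += short
            short = 0.0
    if t_end < t_last:
        raise ValueError("t_end must not precede the last event")
    short *= math.exp(-(t_end - t_last) / tau)
    return short, long_term


once = simulate_synapse([0.0], t_end=60.0)
repeated = simulate_synapse([0.0, 0.5, 1.0], t_end=60.0)
print(once[1], repeated[1] > 0)  # 0.0 True
```

A single event leaves only a trace that has almost vanished an hour later, while three closely spaced events push the trace over the threshold and leave a permanent change.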
With STDP added, Hebb's assemblies can also be chained into sequences. Language information is likewise stored in nested form, as the assembly that is generated when a sentence appears. The assembly for one sentence differs from that of another; the same word has different meanings in different sentences because it is linked into different assemblies. So an assembly can bring multi-component, multimodal information together: visual, auditory, and olfactory information can all be linked. Information of different modalities is processed in different areas of the network. How does a natural neural network bind information of different modalities together? It uses synchronous activity: different areas oscillate at the same time, or with a fixed phase difference between their oscillations, and this synchrony binds the various assemblies and input information together. Another very important feature of the nervous system is the map: input is not chaotic but is organized into maps. Some map structures have already been used in artificial networks.

The collaborative development of brain science and brain-inspired artificial intelligence is our prospect for the future. It emphasizes mutual support, mutual promotion, and common development. Seeing BP neural networks emerge in artificial intelligence made me wonder whether natural networks have a natural BP algorithm that requires no instructions, and we found this phenomenon. Although the phenomenon has been discovered, it has not yet been applied back to artificial neural networks. So I say that if it were fed back into artificial neural networks, you might find this feature useful. What I want to say is that this should be the best example of combining brain science with artificial intelligence: brain science can be applied to artificial neural networks, and vice versa.
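Binding by synchrony, as described above, ties together information from different modalities when the areas that process it oscillate in step. This can be illustrated by grouping features according to their oscillation phase. In this hypothetical sketch each feature is given a phase in radians. Features whose phases lie within a tolerance of one another are bound into one group. The feature names, phases, and tolerance are invented for illustration, and wrap-around at 2π is ignored for brevity.

```python
def bind_by_phase(phases, tolerance=0.3):
    """Group features whose oscillation phases (radians) are close together.

    Features are sorted by phase; a new group starts whenever the gap to the
    previous feature exceeds `tolerance` (single-linkage grouping).
    Wrap-around at 2*pi is ignored for simplicity.
    """
    groups = []
    last_phase = None
    for name, phase in sorted(phases.items(), key=lambda item: item[1]):
        if last_phase is None or phase - last_phase > tolerance:
            groups.append(set())
        groups[-1].add(name)
        last_phase = phase
    return groups


features = {
    "visual:red": 0.10, "visual:round": 0.15, "auditory:bounce": 0.20,  # one object
    "visual:green": 2.00, "olfactory:grass": 2.10,                      # another
}
print(bind_by_phase(features))
```

Features from different modalities end up in the same group purely because their phases match, which is the essence of binding by synchrony.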
To combine these two fields effectively, the Chinese Academy of Sciences established the Center for Excellence in Brain Science and Intelligence Technology last year. This center is different from ordinary centers: we really work together. We believe that in the future, the country that best advances artificial intelligence and brain intelligence through collaborative development will be the most promising. I hope everyone will follow the future development of this brain science and intelligence center. That is all for today. Thank you.

Many human inventions began with admiration for abilities that animals possess. We started by developing bionic structures and eventually found product forms suited to ourselves. Seen this way, artificial intelligence can be understood as humanity, having envied the abilities of all kinds of animals, finally setting out to imitate one of the most difficult of them: intelligence. Besides satisfying human desires, many earlier inventions brought humanity added value that no one had anticipated (a not entirely apt example: when the airplane first appeared, many senior, battle-hardened military officers believed it would play no role in war). Artificial intelligence may likewise bring added value we cannot imagine today. And rather than being replaced by artificial intelligence, the more likely outcome is that, just as brain science and computer science now cooperate closely, we will come to complement artificial intelligence, perhaps even live in symbiosis with it. Will we see this happen in our lifetime? Let us wait and see.

CCAI | Is the key to AI hidden in the wonderful electrical signals between the synapses of the brain's neurons?

Foreword