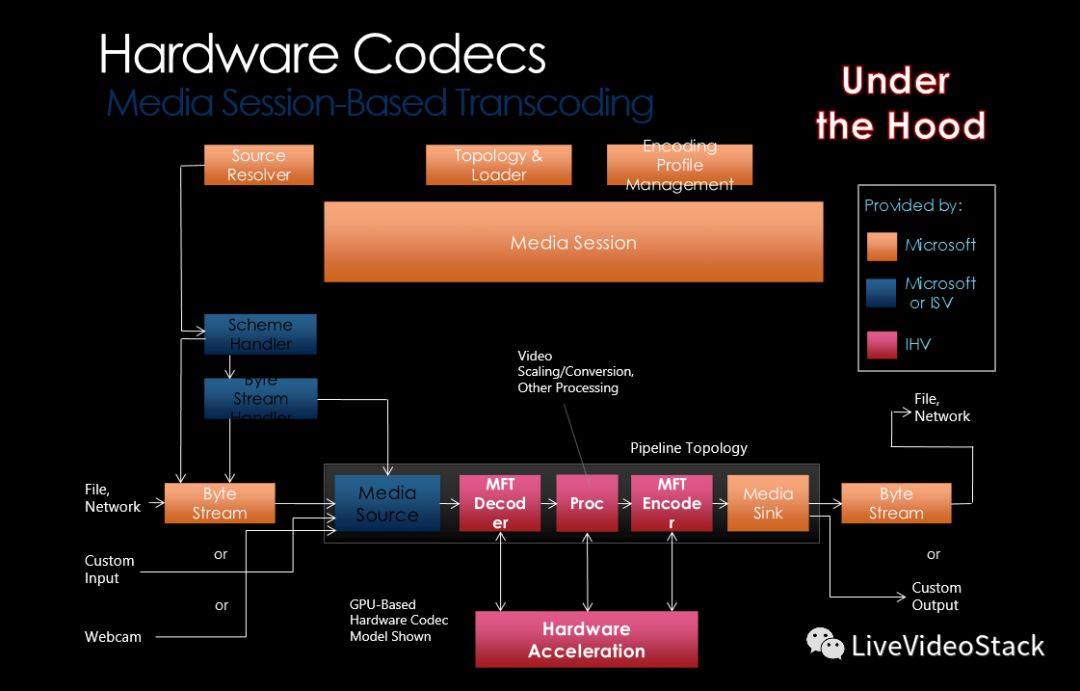

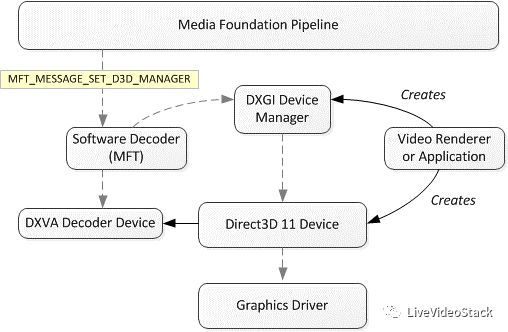

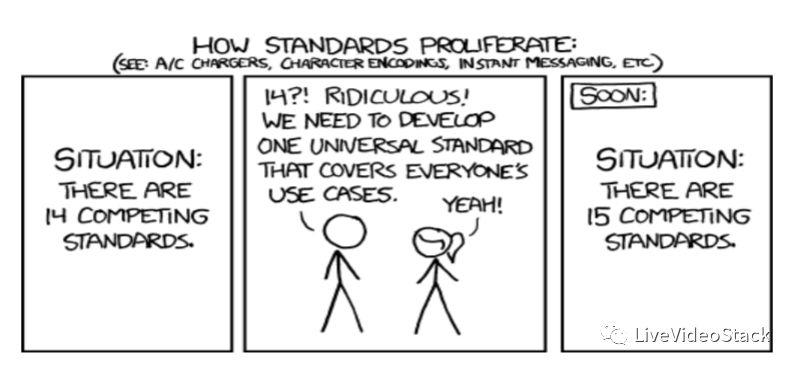

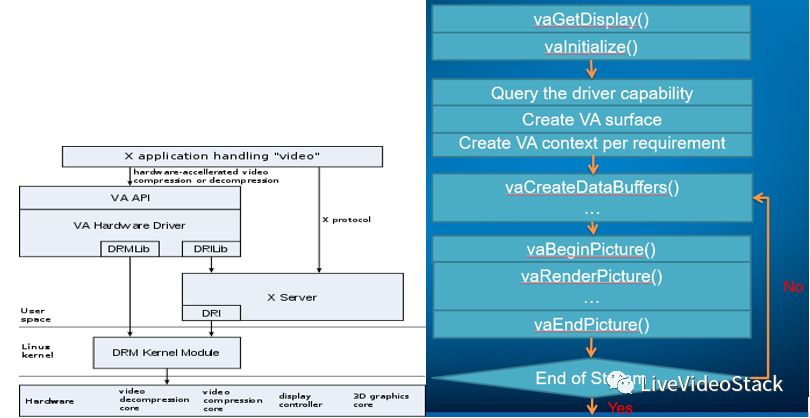

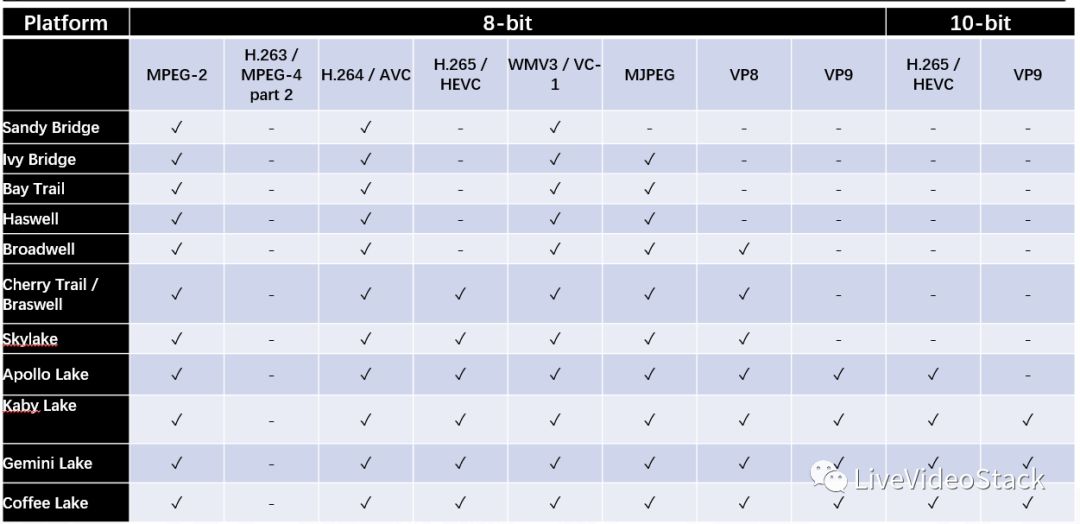

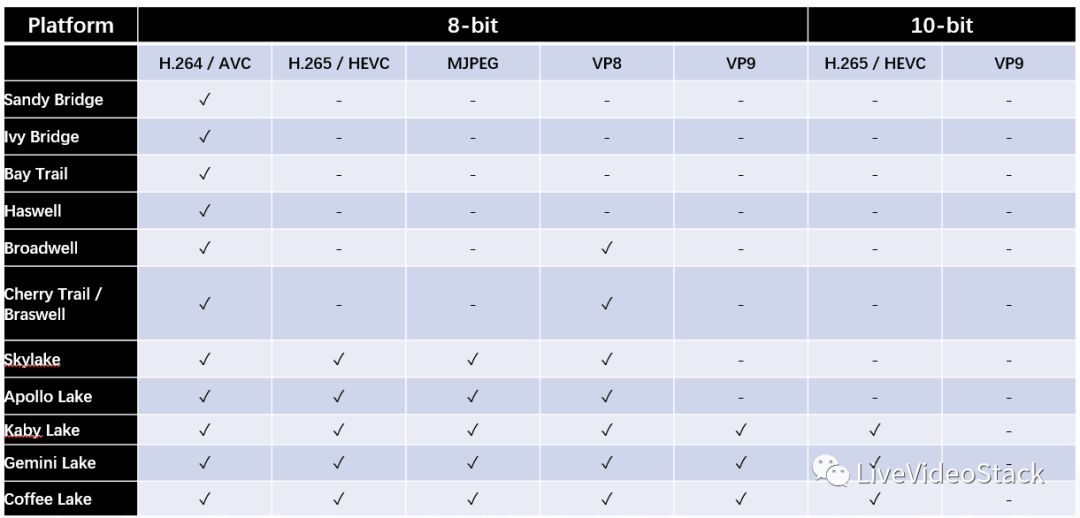

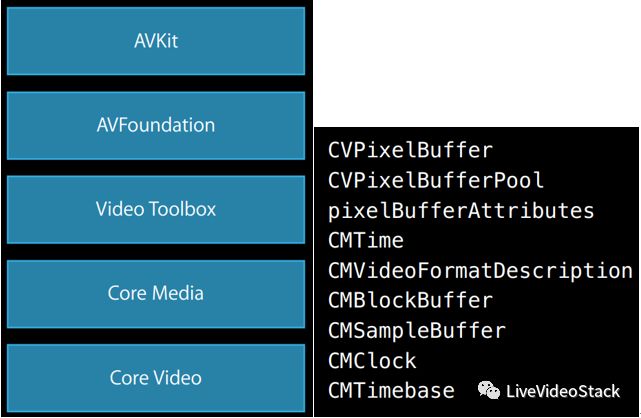

Multimedia applications are typically resource-intensive, so optimizing them is critical. This is the original motivation for dedicated video-processing hardware acceleration, which in turn lets the entire system run more efficiently. Supporting hardware acceleration, however, poses several challenges for software developers. One is the risk of degrading overall system performance. Another is the complexity and diversity of hardware architectures, which forces developers to maintain separate code paths for different architectures and scenarios, and optimizing that kind of code is time-consuming and labor-intensive. Consider that you may need to target many operating systems (Linux, Windows, macOS, Android, iOS, ChromeOS) and many hardware vendors (Intel, NVIDIA, AMD, ARM, TI, Broadcom, ...). A versatile, complete, cross-platform and cross-vendor multimedia hardware acceleration solution is therefore very valuable.

Dedicated video acceleration hardware lets operations such as decoding, encoding, or filtering complete faster while using fewer other resources (especially CPU), but it may impose additional restrictions that do not exist with software CODECs. On the PC platform, video hardware is typically integrated into the GPU (from AMD, Intel, or NVIDIA), while on mobile SoC-type platforms it is usually a standalone IP core (from many different vendors). Hardware decoders typically produce the same output as a software decoder but use less power and CPU to do so. The features supported by different hardware also vary: for complex CODECs with many profiles, hardware decoders rarely implement full functionality (for example, H.264 hardware decoders often support only 8-bit YUV 4:2:0).
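Because a hardware decoder usually covers only a subset of a CODEC, a player has to check each stream against that subset and fall back to software otherwise. The sketch below models that decision; `HwDecoderCaps`, `StreamInfo`, `choose_decoder`, and the `H264_TYPICAL` capability set are hypothetical names for illustration, and in a real system the capabilities would come from querying the driver or acceleration API rather than from a hard-coded table.

```python
from dataclasses import dataclass


# Hypothetical capability description for one hardware decoder.
# Real values would be queried from the driver/API; these only mirror
# the "8-bit YUV 4:2:0 only" H.264 example from the text.
@dataclass(frozen=True)
class HwDecoderCaps:
    codec: str
    bit_depths: frozenset
    chroma_formats: frozenset


@dataclass(frozen=True)
class StreamInfo:
    codec: str
    bit_depth: int
    chroma_format: str  # e.g. "4:2:0", "4:2:2", "4:4:4"


def choose_decoder(stream: StreamInfo, caps: HwDecoderCaps) -> str:
    """Return "hardware" if the stream fits the decoder's subset, else "software"."""
    if (stream.codec == caps.codec
            and stream.bit_depth in caps.bit_depths
            and stream.chroma_format in caps.chroma_formats):
        return "hardware"
    # Anything outside the supported subset falls back to a software CODEC.
    return "software"


# Assumed typical H.264 hardware decoder: 8-bit, 4:2:0 only.
H264_TYPICAL = HwDecoderCaps("h264", frozenset({8}), frozenset({"4:2:0"}))
```

For example, an 8-bit 4:2:0 H.264 stream is routed to hardware, while a 10-bit or 4:2:2 H.264 stream is routed to the software decoder.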
Many hardware decoders can output a hardware surface that other components can consume directly (with a discrete graphics card, this means the surface lives in GPU memory rather than system memory). In playback scenarios this avoids a copy before rendering; in some cases the surface can also be passed to an encoder that accepts hardware surface input, avoiding copies altogether when transcoding. Hardware encoders, for their part, are generally considered to produce worse output than a good software encoder such as x264, but they are usually faster and use little CPU. In other words, they need a higher bit rate to reach the same perceived visual quality, or they deliver lower perceived quality at the same bit rate. Systems with decoding and/or encoding capabilities may also provide related filtering functions, commonly scaling and deinterlacing; other post-processing functions depend on the system.

The hardware acceleration schemes supported by FFmpeg can be roughly divided into three categories, according to whether they are defined by OS vendors, chip vendors, or industry alliances. The OS category covers Windows, Linux, macOS, and Android; the chip-vendor category covers vendor-specific solutions from Intel, AMD, NVIDIA, and others; and the industry standards are mainly OpenMAX and OpenCL. This is only a rough classification: in practice these schemes overlap, and their relationships are more tangled than three neat categories suggest, which itself reflects how complex hardware acceleration is. As with most technologies, these APIs and solutions keep evolving while carrying their history with them, and the analysis below shows traces of those changes.

1. OS-based hardware acceleration scheme

Windows: Direct3D 9 DXVA2 / Direct3D 11 Video API / DirectShow / Media Foundation

Most multimedia applications on Windows are built on the Microsoft DirectShow or Microsoft Media Foundation (MF) framework APIs for processing media files, with codecs integrated as DirectShow plug-ins or Media Foundation Transforms (MFTs). Microsoft DirectX Video Acceleration (DXVA) 2.0 lets applications call the standard DXVA 2.0 interface to drive the GPU directly and offload video workloads. DXVA is an API that requires a corresponding DDI (device driver interface) implementation for hardware-accelerated video processing. Software CODECs and software video processors can use DXVA to offload certain CPU-intensive operations to the GPU; for example, a software decoder can offload the inverse discrete cosine transform (iDCT). In DXVA, some decoding operations are implemented by the graphics hardware driver, called the accelerator; the others are implemented by the user-mode application, called the host decoder or software decoder. Typically, the accelerator uses the GPU to speed up its operations. Whenever the accelerator performs a decoding operation, the host decoder must pass it accelerator buffers containing the information needed for that operation.

The DXVA 2 API requires Windows Vista or later. For backward compatibility, Windows Vista still supports the DXVA 1 API, and Windows provides an emulation layer that converts between the API and DDI versions. Since DXVA 1 now matters only for backward compatibility, we skip it; in this article, DXVA refers to DXVA 2 in most cases. To use DXVA, an application typically goes through DirectShow or Media Foundation, depending on its needs.
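To make the iDCT example concrete, here is a plain CPU reference for the 8x8 inverse DCT of the kind an MPEG-2-style software decoder might hand to a DXVA accelerator. It is only a sketch of the math (the naive O(n^4) form of the 2-D DCT-III); real decoders and drivers use fast, often integer, approximations, and on DXVA this work happens inside the driver/GPU rather than in code like this. The function name `idct_8x8` is illustrative.

```python
import math


def idct_8x8(coeffs):
    """Naive 2-D inverse DCT of an 8x8 block.

    coeffs[v][u] is the coefficient at vertical frequency v and
    horizontal frequency u. Returns out[y][x] as floats using
    f(x, y) = 1/4 * sum_u sum_v C(u) C(v) F(u, v)
              * cos((2x + 1) u pi / 16) * cos((2y + 1) v pi / 16),
    with C(0) = 1/sqrt(2) and C(k) = 1 otherwise.
    """
    def c(k):
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * 8 for _ in range(8)]
    for y in range(8):
        for x in range(8):
            s = 0.0
            for v in range(8):
                for u in range(8):
                    s += (c(u) * c(v) * coeffs[v][u]
                          * math.cos((2 * x + 1) * u * math.pi / 16)
                          * math.cos((2 * y + 1) * v * math.pi / 16))
            out[y][x] = s / 4
    return out
```

A block with only a DC coefficient of 80 reconstructs to a flat block of 10 (80 / 8), which is a quick sanity check; the per-pixel cost of this loop is exactly why offloading it was attractive.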
Note also that DXVA, DXVA2, and DXVA-HD define only decoding and post-processing acceleration; they do not define encoding acceleration. To accelerate encoding at the Windows level, the only options are Media Foundation's encoding acceleration or a specific chip vendor's solution. FFmpeg currently supports only DXVA2 hardware-accelerated decoding; DXVA-HD accelerated post-processing and Media Foundation hardware-accelerated encoding are not supported (in the DirectShow era, encoding support on Windows required FSDK). The following figure shows a hardware-accelerated transcoding pipeline under the Media Foundation framework.

Because Microsoft's multimedia framework has evolved over time, there are actually two interfaces that support hardware acceleration: Direct3D 9 DXVA2 and the Direct3D 11 Video API. The former uses the IDirect3DDeviceManager9 interface as the acceleration device handle, while the latter uses the ID3D11Device interface.

For the Direct3D 9 DXVA2 interface, the basic decoding steps are:

1. Open a handle to the Direct3D 9 device.
2. Find a DXVA decoder configuration.
3. Allocate uncompressed buffers.
4. Decode frames.

For the Direct3D 11 Video API, the basic decoding steps are:

1. Open a handle to the Direct3D 11 device.
2. Find a decoder configuration.
3. Allocate uncompressed buffers.
4. Decode frames.

Both cases are described in detail on the Microsoft website: https://msdn.microsoft.com/en-us/library/windows/desktop/cc307941(v=vs.85).aspx

In short, FFmpeg's hardware acceleration on Windows covers only decoding and uses only the Media Foundation framework, with support for two device-binding interfaces: Direct3D 9 DXVA2 and the Direct3D 11 Video API.

Linux: VDPAU / VAAPI / V4L2 M2M

The hardware acceleration interfaces on Linux have gone through a long evolution, shaped by competition among various players.
The following comics show how these interfaces evolved and the forces behind them. The end result is the coexistence of VDPAU (https://http.download.nvidia.com/XFree86/vdpau/doxygen/html/index.html) and VA-API (https://github.com/intel/libva): NVIDIA backs VDPAU and Intel backs VA-API. Another familiar chip maker, AMD, actually supports both VDPAU and VA-API, which is an awkward position to be in. Comparing the two, VDPAU defines hardware acceleration only for decoding and lacks encoding acceleration (its decoding side also lacks VP8/VP9 support, and the API seems to be updated slowly). It is also worth mentioning that NVIDIA recently seems to prefer NVDEC over a VDPAU interface for hardware acceleration on Linux (https://?page=news_item&px=NVIDIA-NVDEC-GStreamer). Perhaps in the near future VA-API will unify the video hardware acceleration interface on Linux (so that AMD no longer has to maintain both VDPAU and VA-API), which would undoubtedly be a boon for Linux users.

Besides VDPAU and VA-API, the Video4Linux2 (V4L2) API defines a memory-to-memory (M2M) extension. Through the M2M interface, a CODEC can be implemented as a video filter. Some SoC platforms already support it, and this approach is used mostly in embedded environments.

The following figure shows the block diagram and decoding flow of the VA-API interface under the X Window System.

In FFmpeg, VA-API support is the most complete: essentially all mainstream CODECs are supported. The decoding support details are as follows:

The encoding support details are as follows:

The AVFilter part also supports hardware-accelerated scaling, deinterlacing, ProcAmp (color balance), denoising, and sharpening.
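As an illustration of a full VA-API pipeline in FFmpeg (decode, hardware scale, hardware encode), the sketch below only builds the `ffmpeg` argument list; it does not run it. `-hwaccel vaapi`, `-hwaccel_device`, `-hwaccel_output_format vaapi`, the `scale_vaapi` filter, and the `h264_vaapi` encoder are real FFmpeg options, but the device path `/dev/dri/renderD128` is an assumption (render node numbering varies per system), and the helper name `vaapi_transcode_cmd` is hypothetical.

```python
import shlex


def vaapi_transcode_cmd(src, dst, width, height,
                        device="/dev/dri/renderD128",  # assumed render node
                        encoder="h264_vaapi"):
    """Build an ffmpeg command that decodes, scales and encodes on VA-API.

    -hwaccel_output_format vaapi keeps decoded frames as VA-API surfaces,
    so scale_vaapi and the VA-API encoder consume them without copying
    frames back to system memory.
    """
    return [
        "ffmpeg",
        "-hwaccel", "vaapi",
        "-hwaccel_device", device,
        "-hwaccel_output_format", "vaapi",  # keep frames on the GPU
        "-i", src,
        "-vf", f"scale_vaapi=w={width}:h={height}",  # hardware scaling
        "-c:v", encoder,                             # hardware encoding
        dst,
    ]


if __name__ == "__main__":
    # Print the command as a shell-quoted string for inspection.
    print(shlex.join(vaapi_transcode_cmd("in.mp4", "out.mp4", 1280, 720)))
```

Keeping frames as hardware surfaces end to end is the zero-copy transcode case described earlier; dropping `-hwaccel_output_format vaapi` would make FFmpeg download decoded frames to system memory, and the VA-API filter and encoder would then need them uploaded again.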
In addition, as mentioned earlier, FFmpeg's VA-API path works not only with Intel's backend driver but also with Mesa's state trackers for Gallium drivers, which is how AMD GPUs are supported.

macOS: VideoToolbox

Hardware acceleration on macOS has also followed Apple's long evolution. It started with the C-based API used by QuickTime 1.0 in the early 1990s; only with iOS 8 and OS X 10.8 did Apple finally release the complete Video Toolbox framework publicly (earlier hardware acceleration interfaces were not published and were used only internally by Apple), and along the way the now-obsolete Video Decode Acceleration (VDA) interface appeared. Video Toolbox is a set of C APIs built on the CoreMedia, CoreVideo, and CoreFoundation frameworks, and it supports encoding, decoding, and pixel format conversion. The basic layering of Video Toolbox and its more detailed structure are shown in the following figures.
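To tie the OS-level APIs above back to FFmpeg's command line, the sketch below maps each platform to FFmpeg `-hwaccel` names (`d3d11va` and `dxva2` for the two Windows interfaces, `vaapi` and `vdpau` on Linux, `videotoolbox` on macOS) and builds a decode-only command. The hwaccel names and `-f null -` are real FFmpeg options; the preference order within each platform and the helper name `decode_only_cmd` are assumptions for illustration.

```python
import sys

# FFmpeg -hwaccel names for the OS-level APIs discussed above, keyed by
# Python's sys.platform value. The order (which API to try first) is an
# assumption, not an FFmpeg default.
HWACCELS_BY_PLATFORM = {
    "win32": ["d3d11va", "dxva2"],   # Direct3D 11 Video API, Direct3D 9 DXVA2
    "linux": ["vaapi", "vdpau"],     # VA-API, VDPAU
    "darwin": ["videotoolbox"],      # macOS Video Toolbox
}


def decode_only_cmd(src, platform=None):
    """Build an ffmpeg command that hardware-decodes src and discards the output.

    Falls back to plain software decoding when no hwaccel is known
    for the platform.
    """
    platform = platform or sys.platform
    candidates = HWACCELS_BY_PLATFORM.get(platform, [])
    cmd = ["ffmpeg"]
    if candidates:
        cmd += ["-hwaccel", candidates[0]]  # input option: must precede -i
    # "-f null -" decodes everything without writing a file,
    # which is handy for checking whether hardware decoding works.
    cmd += ["-i", src, "-f", "null", "-"]
    return cmd
```

Only the first candidate is used here; a more careful tool would retry with the next entry (or with software decoding) if FFmpeg reports that the chosen hwaccel cannot be initialized.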